- Development:

As you may have noticed, almost everything around us is computerised, and most of the time the interaction between computers and humans seems flawless: the computer understands our commands and we understand the computer's instructions. We tend to assume it is normal for us to understand computers and for computers to understand us, but this was not the case in the early days of technology. It is thanks to the constant development of HCI over the past few decades that human-computer interaction has come so far that around 70 percent of the global population know how to interact with computers, even though many of us have no basic knowledge of computing.

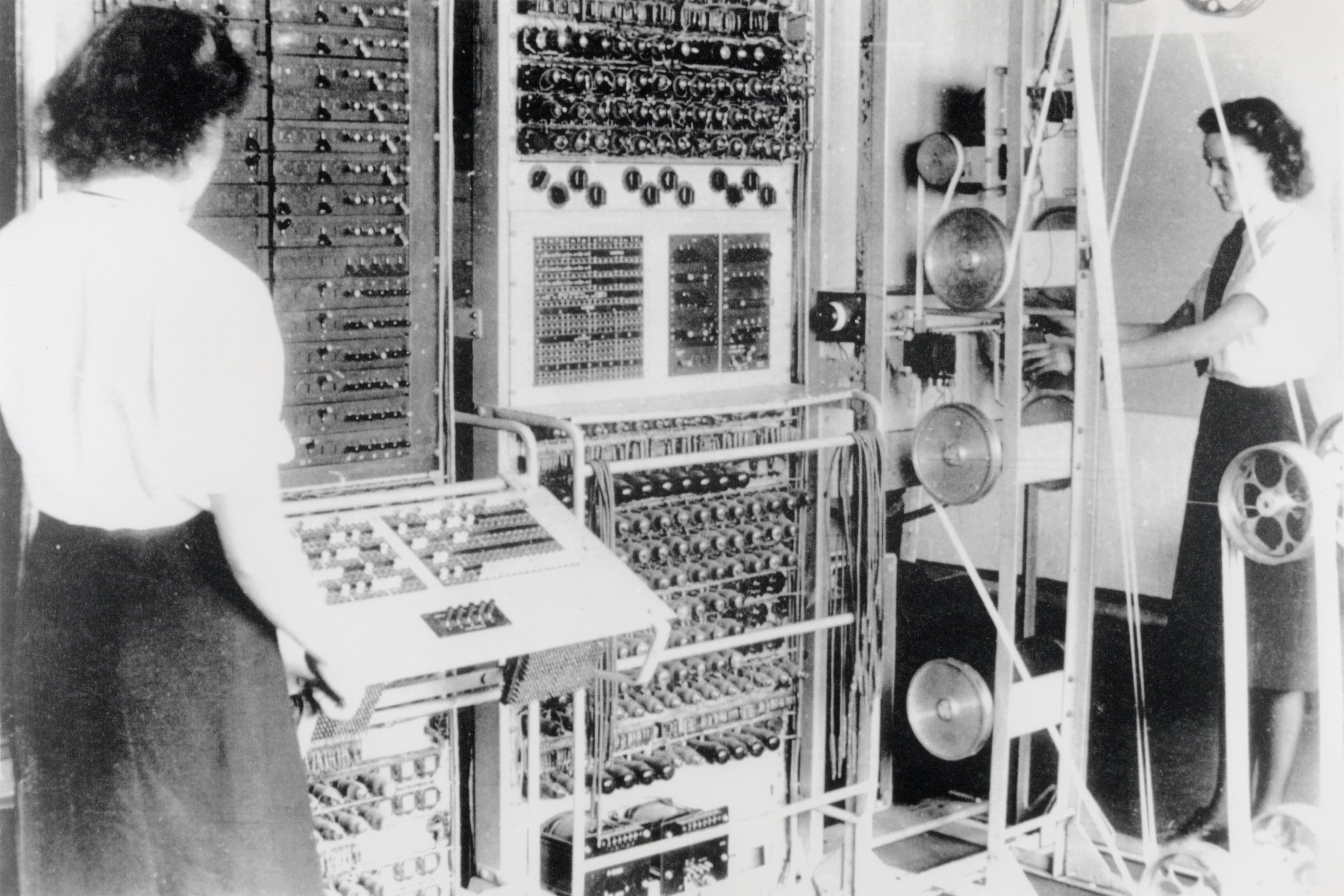

The first kind of HCI surfaced around the 1980s, when personal computing entered mainstream society and homes and offices began buying personal computers for personal and commercial use. This was the first time in history that sophisticated technology was made available to ordinary consumers for everyday uses such as playing games or typing up documents. It was also made possible because computer parts and equipment became cheaper and smaller; once upon a time a computer filled a whole room. Bringing the personal computer into mainstream society meant that computers had to be built and designed so that less experienced users could interact with them easily on a day-to-day basis, and this increased the demand for human-computer interaction software that let less experienced users work with computers and their equipment.

- Early designs:

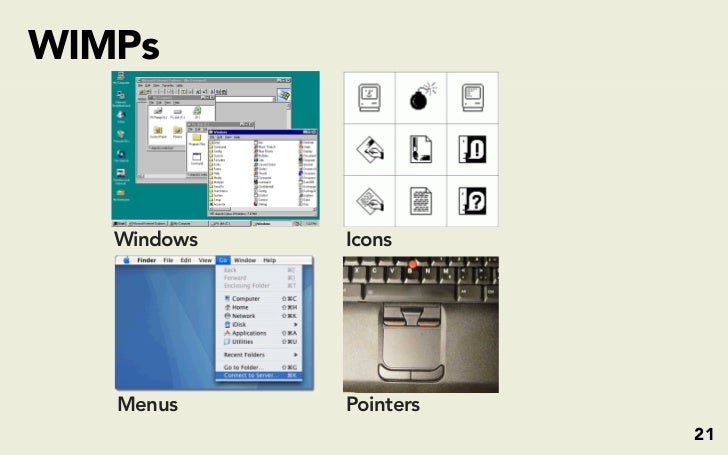

One of the earliest GUI designs made to help human-computer interaction was created by Ivan Sutherland, who in 1963 developed Sketchpad, a program based on computer graphics that took inspiration from how humans learn. This simple GUI system led Xerox to develop its own GUI in the early 1970s, which was based on the WIMP model (windows, icons, menus and pointer).

In the early 1980s Apple came up with its own GUI, again based on WIMP, and it also successfully brought new hardware, the mouse, to make interaction between the human and the computer easier. Thanks to Apple, users could use the mouse to move around the screen, locate documents, click on them to open them and drag and drop them into the bin. Even though this seems a simple concept nowadays, because using a mouse comes naturally to us, it was a revolutionary idea in the early days of personal computing; it shaped the computers of today and eased the interaction between human and computer. Nearly all early HCI designs were limited by the hardware that was available at the time.

Most personal computers of that era were designed around the developer rather than the user: users had to type in commands just to perform basic tasks. This made it difficult for ordinary users to interact with the computer, while programmers found these tasks simple because they had expert knowledge of how to interact with personal computers. An example of a computer designed around the developer was the popular Commodore 64, which had no built-in GUI at all; it started up at a BASIC prompt, so users had to type specific lines of code even to load a program.

- Extended command line (CLE):

Before the GUI, the CLE was the only way users could interact with a PC, so it was used by anyone who worked with computers. In the CLE you had to type a series of commands that told the computer which operations to carry out. Through these commands the user could instruct the computer to move, rename or delete files. The same concept is still in use today, but HCI now lets us instruct the computer much more easily, without the specific commands and syntax that were once needed. One of the most famous command lines, then and now, is MS-DOS, which displays the C:\> prompt when opened.
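To make the idea of typing commands more concrete, here is a minimal sketch of a toy command interpreter in Python. It is not how MS-DOS works internally: the command names `move`, `rename` and `delete` and the function `run_command` are hypothetical (MS-DOS had its own commands, such as REN and DEL). What it shows is that the computer only acts when the user types a known command in exactly the expected form.

```python
import os
import shlex
import shutil
import tempfile


def run_command(line: str) -> str:
    """Parse one typed command and carry out the file operation it names.

    Hypothetical commands understood by this toy interpreter:
      move <file> <folder>   move a file into another folder
      rename <old> <new>     rename a file
      delete <file>          delete a file
    """
    parts = shlex.split(line)  # split like a shell, keeping "quoted names" together
    if not parts:
        return "no command entered"
    command, args = parts[0].lower(), parts[1:]
    if command == "move" and len(args) == 2:
        shutil.move(args[0], args[1])
        return f"moved {args[0]} to {args[1]}"
    if command == "rename" and len(args) == 2:
        os.rename(args[0], args[1])
        return f"renamed {args[0]} to {args[1]}"
    if command == "delete" and len(args) == 1:
        os.remove(args[0])
        return f"deleted {args[0]}"
    # Any typo or missing argument is rejected: the user must know the exact syntax
    return f"unknown command or wrong arguments: {line}"


if __name__ == "__main__":
    # Work in a throwaway folder so no real files are touched
    with tempfile.TemporaryDirectory() as folder:
        draft = os.path.join(folder, "draft.txt")
        final = os.path.join(folder, "final.txt")
        open(draft, "w").close()
        print(run_command(f'rename "{draft}" "{final}"'))
        print(run_command("remove final.txt"))  # rejected: not a known command
        print(run_command(f'delete "{final}"'))
```

A single misspelt word, such as `remove` instead of `delete`, is enough for the command to be refused, which is exactly why command lines were hard for inexperienced users.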

- Graphical User Interface (GUI)

GUIs are the HCI method we use nowadays to interact with our computers and the apps on our devices. Microsoft Windows and its application software, such as MS Office, rely heavily on the GUI: to work with programs like Word and PowerPoint you go through the GUI, which makes human-computer interaction easier. GUIs are vital in modern society because they let users access and execute commands in programs with a tap of a button or a click in a dialogue box.

- Web user interface (WUI)

A WUI generates web pages that interact with the user, either through the user's input or through information received from the browser. One of the most widely used programming languages for this kind of interface is JavaScript. Because JavaScript runs inside the browser, a WUI can respond to the user immediately, without having to wait for a response from the server.

- Visual systems

Thanks to advances in software we can now create visual systems that reproduce 3D pictures and products. A major example is Google Maps, which now renders its maps in 3D; this makes the maps more detailed and lets us perceive depth. Another example is the 3D images of unborn babies produced by scans. Overall, 3D visual systems are developing every day, with 3D films shown daily and 3D displays in TVs and games consoles such as the Nintendo 3DS.

Other visual systems that are developing day by day are holograms: you can now buy Microsoft's HoloLens, which blends holograms with the real world to create a mixed reality experience. LCD screens are another type of visual system, and you can clearly see the surge of 4K TVs, which are the current trend in the television market.

- Specialised interface

Once we began to understand HCI and to create computers and equipment designed around the user, so that users could interact to the best of their ability, we started studying our audiences. We found that certain users in the mass population cannot use mainstream software or keyboards, and for them accessing technology was harder than for others: some were held back by difficulty understanding technology, and some by their physical capabilities. This is why the development of specialised interfaces is vital, as it allows individuals with physical, visual and hearing impairments to interact with computers. A well-known example of a specialised interface created for a disabled user is that of Stephen Hawking, who was diagnosed with motor neurone disease, which gradually left him paralysed. He was still able to interact with people and computers thanks to custom-built communication hardware and software, specialised interfaces designed for him. Today there is a wide variety of assistive and adaptive equipment and technology, much of it cheap and easily available, to support users with disabilities. An example is the trackball, which can be used by people who have RSI or arthritis.

- Present and future development:

Other developments happening all around us are robotic systems and 3D image rendering, which is improving day by day. In the gaming industry computer-generated images are becoming so realistic that it is sometimes hard to distinguish between the virtual world and the real world. Realistic virtual reality is not only helping the gaming industry close the gap between reality and the virtual world; it is also helping other industries. The aviation industry now uses virtual flight simulators to train pilots before they fly real planes, which reduces costs. The medical industry uses the same technology for virtual surgery training with headsets such as the Oculus Rift (a VR headset) and Microsoft HoloLens (a mixed reality headset). These headsets give the user an immersive experience that can sometimes make it hard to tell the real from the virtual, which is useful because junior surgeons are then better prepared for real surgeries.

Another area improving day by day is speech-activated software, which is now all around us. It has made huge steps forward since the 1990s, when users had to speak very clearly and slowly to instruct a computer by voice. Nowadays assistants such as Alexa and Google Assistant can understand many languages and a wide range of accents, and thanks to artificial intelligence they keep learning the way you speak, continually improving their speech recognition and accuracy.

- Thought input

As you might know, computers are very good at making decisions, and we are now giving them important decision-making roles. For example, thanks to computers, artificial intelligence and their algorithms, much of the banking system is computerised: if you apply for a loan or mortgage, it is often not a member of staff who approves it but a computer, after checking that your details match all the criteria for the loan. One advantage of letting computers decide is that their choices are not influenced by human emotions and feelings; the downside is that they treat everyone the same and cannot take into account people's individual circumstances, emotions and feelings.
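The kind of criteria check described above can be sketched as a simple rule-based function. This is a minimal illustration only: the criteria and thresholds (`MIN_INCOME`, `MIN_CREDIT_SCORE`, `MAX_LOAN_TO_INCOME`) are hypothetical values, not any bank's real rules, and real lending systems are far more complex. It also shows the downside mentioned above, since every applicant is judged by exactly the same fixed rules.

```python
from dataclasses import dataclass


@dataclass
class Application:
    annual_income: float  # applicant's yearly income in pounds
    credit_score: int     # score from a credit reference agency
    loan_amount: float    # amount the applicant wants to borrow


# Hypothetical lending criteria, chosen only for illustration
MIN_INCOME = 20_000
MIN_CREDIT_SCORE = 600
MAX_LOAN_TO_INCOME = 4.0  # loan may be at most 4x annual income


def assess(app: Application) -> tuple[bool, list[str]]:
    """Check every criterion and return (approved, reasons for any refusal)."""
    reasons = []
    if app.annual_income < MIN_INCOME:
        reasons.append("income below minimum")
    if app.credit_score < MIN_CREDIT_SCORE:
        reasons.append("credit score too low")
    if app.loan_amount > MAX_LOAN_TO_INCOME * app.annual_income:
        reasons.append("loan too large for income")
    # Approved only if no rule failed; personal circumstances are never considered
    return (len(reasons) == 0, reasons)


if __name__ == "__main__":
    print(assess(Application(annual_income=30_000, credit_score=700, loan_amount=100_000)))
    print(assess(Application(annual_income=15_000, credit_score=550, loan_amount=100_000)))
```

With these made-up thresholds the first applicant is approved and the second is refused on all three criteria, no matter what their personal situation might be.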

However, there are still sectors where human commands are needed, and as technology advances we want to reach a point where we can give commands to computers just by thinking them. This would be one of the biggest game changers in many sectors, and it would especially help users with speech and mobility problems, who could instruct the computer to carry out tasks just by thinking about them. Early versions of this technology have been researched for military aviation, with the aim of letting pilots perform actions such as locking onto a target just by thinking about it, which would allow split-second decisions and reduce the time taken to lock a target manually.

- Society

We live in a society where computers and technology are an integral part of life, and many aspects of our lives depend on computing. Some of the world's major sectors, for example banking, transport, communication and hospitals, rely heavily on computerised systems.

- Improve usability:

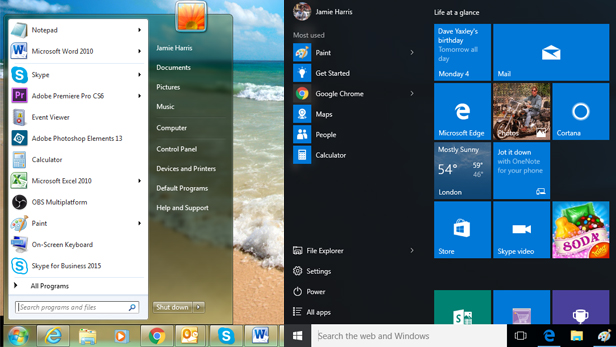

Thanks to HCI and its constant development, ordinary users can now interact with technology with ease and feel comfortable relying on it. This is partly because big companies like Microsoft, Apple and Samsung keep their product interface designs, GUIs, toolbars and menus consistent. Having the same layout and features means users get used to them over time, so fewer technical skills and less specialised knowledge are needed, and interacting with the device comes naturally. For example, a user who upgrades from Windows 7 to Windows 10 will still feel comfortable because they are already familiar with the commands and layout; at the same time they will be keen to explore the new features, which they will also get used to over time.

Consistency in product layout and software over a long period lets users get comfortable with a product and understand it better. This increases the product's user-friendliness, because the user perceives it as familiar and comfortable to use, which in turn simplifies input and output between user and computer, as both have a mutual understanding.

One of the huge advantages of technology and HCI is domestic appliances, which are a vital part of our lives. Domestic appliances exist to make life easier, and because they are used by everyone they need to be designed around the user: designers and programmers must consider that an appliance might be used by someone who does not even know how to open a PC. This is why, in the technology sector, domestic appliances are the simplest and most user-friendly products, as they are all designed in a similar way.

As a result, domestic appliances and their displays are very simple to understand and use. Some appliances no longer even come with a manual, because these appliances have been in people's homes for so long that most users naturally know how to use them. Manuals are still useful for getting basic knowledge of a product, however, so they are still provided, either digitally or on paper.

- Specialised interfaces:

New and modern interfaces are being developed every day to allow users with physical and visual impairments to interact with computers:

- For example, users with physical disabilities can use voice recognition software to interact with computers. One of its biggest benefits is that it can convert speech into digital text, so the user can simply talk to the computer instead of typing the content. This is a huge step towards making computers accessible to everyone regardless of ability, and it was possible thanks to years of constant development of voice recognition software. We are now at a point where voice recognition software from companies like Google can understand what you say in different languages, which lets users from different countries interact with it. On top of that, voice recognition is being built into document-editing software as an alternative to typing, allowing more users to work with documents in a way we could hardly have imagined ten years ago.

- We now also have software that does the opposite: speech synthesis software converts text to speech, so the computer can read text aloud for users who have a visual impairment. This is another huge step forward, as we can now serve a range of users who ten years ago had far fewer ways to use technology. However, this technology still has a long way to go: it has its flaws, and synthesised speech can sound robotic and sometimes be hard to understand. The aim for the future is software whose voice is more human and friendly. Apple and some Android phones use this technology in screen readers for users with visual impairments, and these readers are proving very useful, allowing more and more visually impaired users to interact with technological devices.

Above we have given just two examples of specialised interfaces, but there are many more, designed for people with different needs, which are helping millions of users get access to technology.

- Interfaces for hostile environments:

Thanks to robots and remote-controlled devices we can reach very hostile environments and perform dangerous tasks without risking human life. For example, remotely controlled robots are used to locate landmines and to explore Mars, tasks that would otherwise require humans to risk their lives or would simply be impossible. A rover can operate on Mars and gather scientific information about the Martian environment through data logging, which is sent back periodically to people on Earth.

- Complexity

At the same time, very complex GUIs are being created for important jobs and roles, and for games, to make them as realistic as possible. Examples are the fly-by-wire systems used in aircraft, which replace mechanical flight controls with computer-controlled electronic ones and take over more and more of the pilot's actions, and, in gaming, head-mounted displays (VR headsets), which create a more convincing virtual reality that tests users to the extreme on what is real and what is not, in games such as Fallout 4 VR.

Right now, society is divided. Much of the older generation still rejects technology, seeing it as a threat because it is changing the world around them in ways they are not used to; because of its complexity, they feel technology is too difficult to use and that they are better off without it. However, the advancement of technology means a better future lies ahead, as advancing technology and HCI are improving the quality of life of millions of users. I think that in the future technology will reduce the gap between mainstream users and users with disabilities, and will become more and more accessible to every member of society. People will be more adaptable, open-minded and welcoming to new technology because they will already be used to new technology arriving every two to three years. What excites me most about the future, however, is that we might see people with speech and mobility impairments able to express their thoughts and be more involved in society, thanks to specialised interfaces designed to let them interact with computers, and with society through computers.

- Economy

One of the major sectors benefiting from rapid technological development is the financial sector. Thanks to technology, companies are cutting staff and replacing them with robots and automated systems, which are more efficient, can work at the same rate all day long and do not need to be paid, saving the companies money on salaries.

- Productivity per individual

Specialised computers and software are a vital part of increasing user productivity, because with specialised products and equipment users can work better and more efficiently with a smaller margin of error. For example, when turning large sets of data into graphs, we use a wizard: we quickly enter the data and the wizard quickly produces the output. The wizard takes a fraction of the time a human would and, because the inputs are simpler, it helps avoid human errors; less expert users can also do the same job, which makes it cost-effective. Overall this reduces the time taken to produce results and increases the productivity of both the individual and the company.

- Increased automation

One of the main things to consider when developing HCI is how to reduce user input, as this reduces the expertise or knowledge the user needs and makes the product accessible to a wider audience. Text readers are a simple and effective example of automated HCI that allows multitasking: if someone sends you a message, the device reads it aloud in a somewhat artificial voice (though one that is getting closer and closer to a human voice) while you carry on with other tasks. Automation like this increases our productivity; for example, voice-controlled cars let us make calls while driving, because the phone is connected to the car and we can tell the car whom to call without picking up the phone.

- Automatic judgement input

This is more evidence of automation taking hold of industry, as companies want to be efficient and precise with their products and increase their profits. Automatic judgement inputs are used to reduce time and expense: a robot can judge a product, for example by its size, weight and height, much more quickly than a human. If a human can test and verify ten products in a given time, a robot might test and verify twenty in the same time. Humans are being replaced not only because they are less efficient than robots; robots are also cost-effective and can judge inputs more consistently than humans, producing better results that in turn improve the company's finances.
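An automatic judgement input of this kind can be sketched as a tolerance check. In this minimal example a product passes only if each measurement is within an allowed tolerance of its target; the target sizes, tolerances and names (`SPEC`, `judge`) are hypothetical, and a real inspection line would take its measurements from sensors rather than typed-in numbers.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    length_mm: float
    height_mm: float
    weight_g: float


# Hypothetical specification: field name -> (target value, allowed deviation)
SPEC = {
    "length_mm": (100.0, 0.5),
    "height_mm": (40.0, 0.3),
    "weight_g": (250.0, 2.0),
}


def judge(item: Measurement) -> list[str]:
    """Return the names of measurements outside tolerance; an empty list means pass."""
    return [
        name
        for name, (target, tolerance) in SPEC.items()
        if abs(getattr(item, name) - target) > tolerance
    ]


if __name__ == "__main__":
    batch = [
        Measurement(length_mm=100.2, height_mm=40.1, weight_g=249.0),
        Measurement(length_mm=101.0, height_mm=40.0, weight_g=250.0),
    ]
    for item in batch:
        failures = judge(item)
        print("PASS" if not failures else f"REJECT ({', '.join(failures)})")
```

The same fixed rules are applied to every item, which is where the speed and consistency described above come from.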

- Voice input

Voice input is another huge industry. Thanks to developments in HCI and AI, call centres are replacing human staff with voice input assistants. The more repetitive a job is, the worse a human tends to perform it: asking the same set of questions over and over can be tiring and frustrating, which might lead someone to lash out at a customer, something that has happened in the past. This is why owners are replacing humans with voice input software: computers do not get bored of doing the same task over and over, because they are designed for that particular task, for example asking the same set of questions to many callers.

- Thought input

This kind of software is also becoming vital in our society, as it makes computers accessible to more and more users. As discussed above, the development of thought input software is helping users with disabilities to interact with technology, allowing people with different capabilities to communicate their thoughts to the outside world more easily when they find speech or gestures difficult. Stephen Hawking's communication system, which he controlled with small movements of his cheek muscle, shows how powerful such interfaces can be, even though it did not read his thoughts directly.

- Varied working environment

Since fax machines became widespread in the 1980s, the working environment has kept changing how we work. Day by day we are becoming more reliant on computers, and we can easily see this in organisations like the NHS: if its databases go down, a whole hospital can be paralysed because staff have no access to data or patient records, which was not a problem 20 years ago when everything was still recorded with pen and paper.

Today not only the office environment is changing but also the tools we use. For example, the latest mobile phones and mobile communication services let us work remotely, so some employees do not even have to go to the office and can work from any location they prefer. The range of mobile communication available, such as 4G, 5G, cloud storage, smartphones, laptops and Wi-Fi, is making our working environment more versatile than ever and lets us work from anywhere in the world.

In many sectors technology is having beneficial impacts; some tasks that used to take ages, for example creating financial graphs, now take only a few minutes. However, I think technology is being pushed too far into our lives, making us rely more and more on the internet and technology in general, so that if major outages or cyber attacks occur in the future we will be in a worse position than ever, as most of our sectors are now fully computer-based. We already have examples of this, such as the NHS, where a single ransomware attack (WannaCry, in 2017) disrupted large parts of its network. Because of technology, more and more industries that were once at the top are falling, a major example being Blockbuster, and more will follow. On the other hand, as the economy brings more and more technology into its environment, a whole new range of jobs will appear that we never thought might exist, for example social media marketing, which was unheard of 20 years ago. So the downside of an economy depending on technology is that attacks on systems and networks will have brutal effects on companies, and job sectors that were once stable will fade away; the benefit is that new job sectors will arrive with new opportunities. I think we are at a transitional point in time where our society is only semi tech-savvy, which will change in the next 10 to 20 years, and our jobs and economy will become stable again.


- Culture

Thanks to HCI and the way it is designed, we are breaking down barriers and people are closer than ever. One example is Google Translate, which lets two users from different countries who speak different languages talk to each other by translating each user's words into the other's native language. This is only one of the ways HCI is bringing everyone together.

- The ways in which people use computers

Technology is not only helping us with our work but is also becoming part of our culture. The previous generation was reluctant to use technology, but the current generation has embraced it and become reliant on it. Technology has shaped many things in our culture over the past 10 years; for example, we now text more than we speak on the phone, and we see texting as an alternative way to chat to someone and confirm meetings. We now use smartphones as our personal diary, alarm and source of entertainment: with a smartphone I can set an alarm for when to wake up and at the same time note the appointments I have tomorrow and the things I need to do. It also gives us access to social media websites, streaming services such as Netflix for watching films on the go, and games without the need for an actual console. Smartphones are becoming so all-inclusive that many of us no longer even carry an Oyster card or bank card, thanks to NFC contactless technology.

The same goes for laptops, which are getting smaller and lighter, so more users can carry them around and work on the go; they are also a source of entertainment, as you can play games or watch films on them while sitting on a plane.

- Domestic appliances:

In this sector, too, everything is getting a touch of technology: we now have smart coffee makers, kettles, boilers, lights and vacuum cleaners, which are automated and can be commanded remotely through smartphone apps. For example, you can tell your coffee maker in the kitchen to make you a coffee from your bedroom through an app. Overall, most domestic appliances now use some sort of technology to function; some use less and some fully embrace it, and we have reached a point where even the most menial domestic tasks can be automated.

- Psychological and sociological impacts of IT

The development of HCI is having negative impacts as well as positive ones. The variety of work that once existed in the job market is shrinking day by day because automated technology is doing many jobs better and more cheaply than humans, and the work that remains is less and less specialised. This process is called deskilling.

- Impact of deskilling

As mentioned, automated equipment is taking over major industries, replacing skilled employees who were once vital to how companies functioned. Automated equipment reduces the complexity of the work, which devalues workers, because the work they used to do is now done better and far more cheaply. Automated machines are also removing the need for specialised trades such as milling and wood machining, as automation lets companies cut costs by employing fewer skilled workers.

Because automated robots can produce goods on a mass scale at a faster pace, they are forcing many traditional trades, such as handmade furniture making, dressmaking, tailoring and glass making, out of business. Automated robots do the skilled work, so fewer skilled employees are needed in the workplace, which reduces companies' costs. Specialised knowledge is needed less and less too, because the employee only has to give instructions to the machine, which then performs the job.

- Impact on developing nations

Thanks to the advancement of technology and the increased demand for it, developing countries are gaining more and more jobs. For example, when you call a call centre nowadays, your call will most likely be answered in another part of the world; this is possible thanks to developments in HCI and a world that is becoming more connected every day. The benefit of sourcing products and jobs from abroad, especially from Asia, is large: manufacturing there is cheaper and workers are paid less than the average European worker, which reduces the overall cost. This is why Apple writes that its products are designed in California and assembled in China: the parts cost less and the workers are paid less, which means more profit for the company. It also means more work for the countries where the products are assembled, giving them the opportunity to grow and develop their economies.

I think computers and technology have already become part of our culture. Most of the devices we use now are multi-tasking devices that can do many things: phones were once made just for calling, but a smartphone can make calls, plan your day, set alarms and provide entertainment, all from a single device. Until now we have focused on making our devices smart, and I believe we have accomplished that, as everything from the TV to the smartphone is now smart and able to perform many different functions.

As humans we do not always like new things, but I believe we will get used to our environment being smart as well. For now, smart domestic devices and cars are quite expensive for the general public; however, as the technology becomes more affordable, smart domestic appliances controlled directly from a phone will become normal, and self-driving cars, planes and buses will become more common and cheaper. These smart devices may make us even lazier, but they will also make us more ecological and safer, because the computer will be making decisions for us and it is designed to serve us.

Understand the fundamental principles of interface design

- Perception

Perception of a GUI differs between the user and the developer. The developer sees the GUI from a design point of view: they consider the importance of the colours used, the correct positioning of the GUI on the user's desktop and, overall, how each of the GUI's characteristics might affect its ease of use. The user, on the other hand, may not be aware of all the details the developer knows about, because they see the GUI from a user's perspective and judge the program on how easy, interactive and visually appealing it is. The client for whom the GUI is being designed may not be a user at all, which means they will present their needs and demands to the interviewer from a business perspective and will probably not be able to fully represent the needs of the end user. What we understand from this is that the perception of a GUI varies from person to person, depending on the role they play when interacting with it.

What we perceive in a GUI is influenced by the following characteristics:

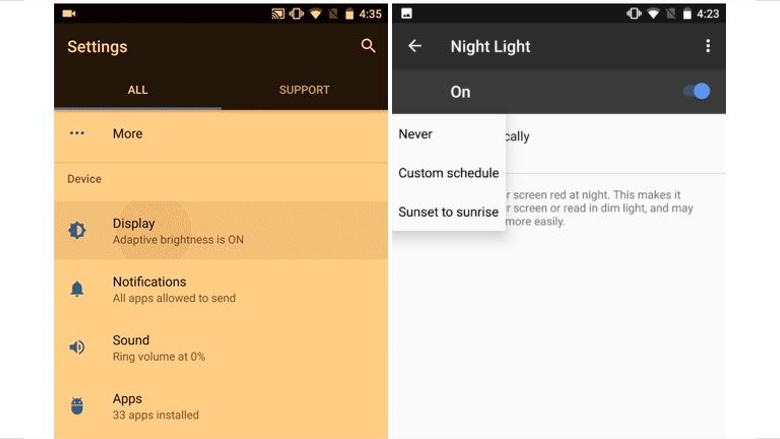

Colour

Colour is a very important factor in the success of a GUI. When creating a GUI, you want to include colours that the audience perceives well, so that they feel comfortable looking at it and interacting with it. We can never get colours 100% right, as different colours affect different users in different ways, but when creating GUIs we try to choose colours that the wider audience feels comfortable with. For example, some individuals find it easier to read text on a yellow background, whereas the majority of users find a yellow background hard to read because the screen appears fuzzy to them; in this scenario we would go with the preferences of the majority.

From a GUI point of view, you may have noticed that Microsoft Office tends to use grey as its main colour, with blue for its title bars and drop-down menus. Many users find this colour selection boring, as they do not see blue and grey as attractive colours in the way they see red or black, which make the user feel more attracted and more focused. In the long run, however, these bolder colours are not effective, because they make the user's eyes feel uncomfortable, and if users find a GUI design uncomfortable they will most likely stop using the software.

Trichromatic system/luminance

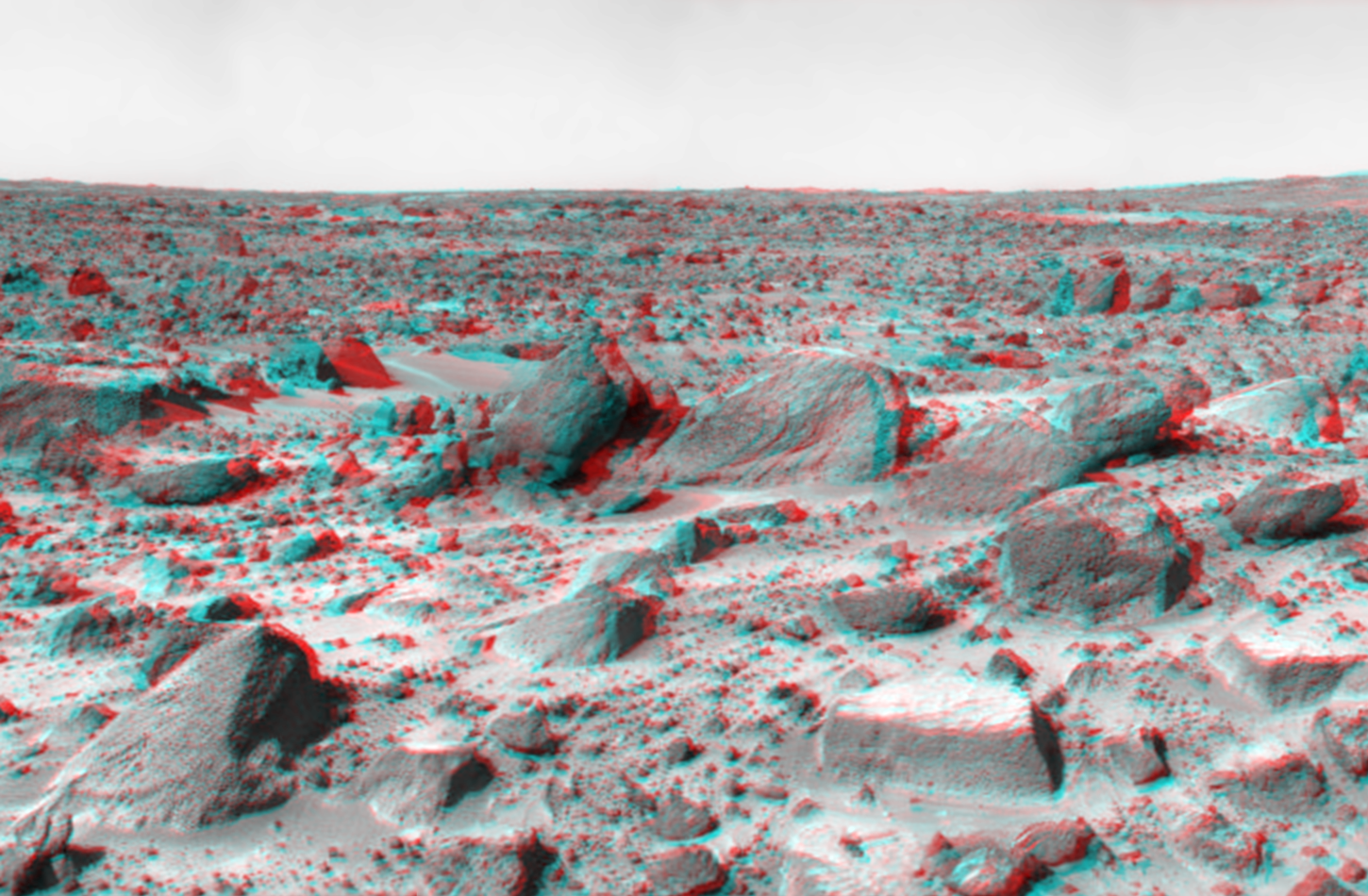

Colour is used nowadays not only in design but also in display technology. Screens build every colour they show from three primary colours, red, green and blue, because the retina of the human eye has three types of receptor cells (cones), each most sensitive to a different range of wavelengths; this is known as the trichromatic system. Colour can also be used to create 3D effects: in anaglyph 3D, for example, each eye views the image through a differently coloured filter, so each eye receives a slightly different picture and the brain combines them into a single image with depth.

We can manipulate colours like this thanks to the research behind the system. Studies of colour vision show that the signals from the three receptor types are recombined into three opponent channels: red-green, yellow-blue and black-white. These are called opponent colour channels because each one relays information about a pair of opposite colours. The word luminance describes the amount of light emitted or reflected from a surface per unit area in a given direction; it is roughly what we perceive as the brightness of a colour, and the luminance difference between text and its background is a large part of what makes the text readable.
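To make luminance concrete, here is a minimal Python sketch that computes the relative luminance of an sRGB colour and the contrast ratio between a text colour and a background colour, using the formulas published in the W3C's WCAG 2.x accessibility guidelines. The colours are examples chosen for illustration, and the sketch only measures luminance contrast; it says nothing about other factors such as glare or personal preference.

```python
def srgb_to_linear(channel: int) -> float:
    """Convert one 0-255 sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Weighted sum of linear R, G, B; green has the largest weight
    because the eye is most sensitive to it."""
    r, g, b = (srgb_to_linear(ch) for ch in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """WCAG contrast ratio: 1.0 means no contrast, 21.0 is black on white."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


if __name__ == "__main__":
    black, white, yellow = (0, 0, 0), (255, 255, 255), (255, 255, 0)
    print(f"black text on white: {contrast_ratio(black, white):.2f}:1")
    print(f"white text on yellow: {contrast_ratio(white, yellow):.2f}:1")
```

Black on white gives the maximum ratio of 21:1, while white text on a yellow background is barely above 1:1 because both colours have very high luminance. WCAG asks for at least 4.5:1 for normal body text.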

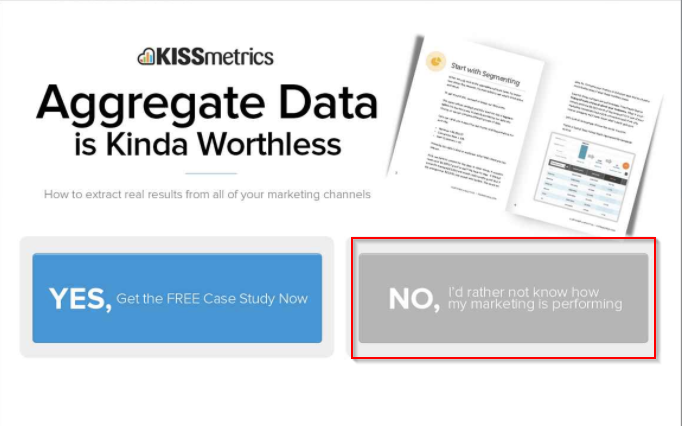

Pop-up effect

This effect is used to catch the user's attention and steer it towards what the developer wants them to focus on, for example when the designer wants a particular section of text to stand out from the rest. In more technical terms, the pop-out effect is explained by preattentive processing: our visual system picks up certain features, such as colour, shape or orientation, before we consciously focus on them, so content that differs in one of these features is noticed almost immediately. There are various ways to achieve this effect in a product: aligning the content differently, placing a different shape around the content you want to pop out, or using colours that make the important content stand out from the rest.

Overall, for a GUI to look professional, we need to make sure we do not overuse any of the features mentioned above. You might be tempted to use attention-grabbing colours or pop-out effects more and more to keep the user's attention on your GUI, but to make your product look professional and stylish you need to understand which feature to use and when. This ensures that your GUI looks professional and stylish, is fit for purpose, and catches the user's attention when needed.

Pattern

When we develop a GUI, we use the word pattern to describe the template a developer should follow when creating a new GUI. A simple example is Microsoft, which makes sure that the dialogue boxes in all versions of its GUI use the same templates and layouts, giving the GUI consistency and uniformity. Using patterns and templates automatically makes a GUI more user friendly and provides connectedness: the ease with which the user can move from one location to another, for example between two software applications that look very familiar, which makes navigating and interacting with them less intimidating.

Consistency and connectedness therefore help users feel comfortable when interacting with a GUI. A base template usually includes the common colours, formats, menus, options and layout. These templates have been built up over many years and are considered the base design from which a new GUI should start; building on top of them ensures that all the familiar features and colours are present alongside the new ones.

Pattern perception is a very important process in how we perceive objects and displays, and a set of rules known as the Gestalt laws describes it:

- Proximity: we see things that are close together as a single group

- Continuity: we interpret smooth, continuous lines more easily than lines that change direction abruptly

- Symmetry: we perceive symmetrical shapes much more easily than non-symmetrical ones

- Similarity: we see similar objects as a group, while an object that is not similar to the others is seen as separate from them (the pop-out effect is an example of this)

The following laws relate to common grouping:

- Common fate: when a set of objects moves together, we see them as a group

- Region: objects that are enclosed together in any way are seen as a group

- Connectedness: objects joined to each other by a continuous line are seen as related
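A layout tool could use the proximity law to guess which on-screen elements a viewer will read as one group. The Python sketch below is a hypothetical illustration of that idea, not an established algorithm from the Gestalt literature: it sorts element positions along one axis and starts a new group wherever the gap to the previous element exceeds a chosen threshold. The function name, the button positions and the 50-pixel threshold are all assumptions made for the example.

```python
def group_by_proximity(positions: list[float], max_gap: float) -> list[list[float]]:
    """Group 1-D element positions: a gap larger than max_gap starts a new group."""
    groups: list[list[float]] = []
    for x in sorted(positions):
        if groups and x - groups[-1][-1] <= max_gap:
            groups[-1].append(x)  # close to the previous element: same group
        else:
            groups.append([x])    # far from the previous element: new group
    return groups


if __name__ == "__main__":
    # Hypothetical x positions (in pixels) of five toolbar buttons.
    buttons = [0, 40, 80, 200, 240]
    print(group_by_proximity(buttons, max_gap=50))
```

This prints `[[0, 40, 80], [200, 240]]`: the large gap between 80 and 200 splits the toolbar into two groups, which is how a viewer would read it too.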

Objects

Every component in a GUI, or in any other kind of image display, is built from many individual, separate objects. Each object sits on a layer, and the layers are arranged in a hierarchy on the display. This matters because, if the image is not layered in the right order, the user may perceive it as incomplete or confusing; the GUI therefore needs to determine which object is drawn first and which object is drawn next on top of it.

A common example is that a GUI window should always appear in front of any text on the desktop. If the GUI is built incorrectly, it may appear behind the text, which makes it hard to read and confusing for the user. Layering mistakes like this make a GUI hard to interact with, because part of it may be hidden behind the main screen, so the user cannot reach the full range of tools and commands that the GUI offers.
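The layering described above can be sketched with the painter's algorithm: every object gets a stacking value (often called a z-order), objects are drawn from back to front, and whatever is drawn last at a point is what the user sees and clicks. The Python below is a minimal illustration under that assumption; the `Layer` class, its fields and the window sizes are hypothetical and not taken from any particular GUI toolkit.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Layer:
    name: str
    z: int       # stacking order: higher z is drawn later, i.e. closer to the viewer
    x: int
    y: int
    width: int
    height: int

    def contains(self, px: int, py: int) -> bool:
        return self.x <= px < self.x + self.width and self.y <= py < self.y + self.height


def draw_order(layers: list[Layer]) -> list[str]:
    """Painter's algorithm: draw back to front so later layers cover earlier ones."""
    return [layer.name for layer in sorted(layers, key=lambda layer: layer.z)]


def topmost_at(layers: list[Layer], px: int, py: int) -> Optional[str]:
    """Name of the layer the user actually sees, and clicks, at a given point."""
    hits = [layer for layer in layers if layer.contains(px, py)]
    return max(hits, key=lambda layer: layer.z).name if hits else None


if __name__ == "__main__":
    desktop = Layer("desktop text", 0, 0, 0, 800, 600)
    dialog = Layer("dialog box", 10, 200, 150, 400, 300)
    print(draw_order([dialog, desktop]))
    print(topmost_at([dialog, desktop], 300, 200))
    # The layering bug from the text: give the dialog a lower z and it ends up behind.
    dialog.z = -1
    print(topmost_at([dialog, desktop], 300, 200))
```

With the correct z values the dialog box is drawn last and receives the click; lowering its z reproduces the mistake described above, where the desktop text covers the GUI.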

Geons and gross 3D shapes

Geons and 3D shapes are both used to make images reliable and consistent when developers create or generate them. Geons are cheap to make and not at all complex to design: they are simple 3D objects, such as cylinders, cones and blocks, built from a small set of basic properties, which lets the viewer recognise them easily from almost any angle.

3D images, on the other hand, can easily be misinterpreted, because different viewers perceive a 3D image in different ways; when designing 3D images for a GUI, you always have to keep in mind how different users might see them. Gross 3D shapes are mostly used in video games and are not very expensive to make, although the cost rises as the time needed to design and produce them increases. Another issue with these shapes is the cost of rendering them quickly, which can sometimes be very high. Sometimes, however, cost is not an issue: when a robot sends back a 3D image of the soil of another planet, the image is priceless, and in cases like this the expense does not matter.

- Behaviour model

We can predict how an interface or a user will behave using a number of models; below we discuss some of the most important ones:

Predictive models

When designing systems and interfaces, we use predictive models for guidance. These models let us predict what might happen in the system or interface, and by predicting problems in advance we can cut down the lengthy research and human testing sessions that often delay the release of a new interface.

The time users take to respond to prompts from a GUI always differs from user to user. When creating a GUI, we therefore have to consider whether the interface should be aware of time and respond to it; for example, should the interface shut down if a command or a response takes too long?

The keystroke-level model (KLM)

KLM works at a very low level of interaction: it breaks each task down into individual actions, such as pressing a key on the keyboard, pointing with the mouse or clicking a mouse button, and assigns each action a standard time. By adding up these times in the order the actions are performed, the designer can predict how long an expert user will take to complete the task without having to test it with users first.
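The calculation KLM relies on is just a sum of operator times. The Python sketch below uses operator times commonly cited from Card, Moran and Newell's work (keystroke K, point P, mouse button press or release B, homing between devices H, mental preparation M); treat the exact figures as typical values, since K in particular varies with typing skill. The two "save" sequences are hypothetical examples, and where the M operators go is a simplification.

```python
# Commonly cited KLM operator times in seconds (Card, Moran and Newell).
# K varies with typing skill; 0.20 s is the figure for an average skilled typist.
KLM_TIMES = {
    "K": 0.20,  # press a key
    "P": 1.10,  # point at a target with the mouse
    "B": 0.10,  # press or release a mouse button (a full click is "BB")
    "H": 0.40,  # move the hand between keyboard and mouse
    "M": 1.35,  # mentally prepare for the next step
}


def klm_estimate(sequence: str) -> float:
    """Predict task time by summing operator times, e.g. 'MPBB' = think, point, click."""
    return sum(KLM_TIMES[op] for op in sequence if not op.isspace())


if __name__ == "__main__":
    # Hypothetical task: save a document, hands starting on the keyboard.
    via_menu = "H M P BB M P BB"   # reach for the mouse, click File, then click Save
    via_shortcut = "M KK"          # press Ctrl+S
    print(f"menu: {klm_estimate(via_menu):.2f} s")
    print(f"shortcut: {klm_estimate(via_shortcut):.2f} s")
```

Under these assumptions the menu route is predicted at 5.70 s and the shortcut at 1.75 s, the kind of comparison a designer can make before building anything.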

The throughput (TP)

Throughput describes the productivity and efficiency of the interaction. TP measures how quickly a command given by the user is carried out and, more generally, how fast tasks of a given complexity are completed; the time taken for each one is also called the response time. In pointing studies, throughput is usually expressed in bits per second.

Fitts' law

Fitts' law was published by Paul Fitts in 1954. It states that the time needed to move a pointer (or a hand) to a target depends on both the distance to the target and the size of the target: a target that is far away and small takes longer to reach than one that is close and large. Fitts' law is therefore used to calculate and predict throughput in advance for any kind of GUI, by predicting human movement from distance and target size. Each user will have a different throughput, as the constants in the law change from user to user and from device to device.
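A common modern form of Fitts' law (MacKenzie's Shannon formulation) is MT = a + b · log2(D/W + 1), where D is the distance to the target, W is the target width, and the logarithm is the index of difficulty (ID) in bits; Fitts' original paper used log2(2D/W). The Python sketch below implements that formula and a simplified throughput, ID divided by movement time. The constants a and b are hypothetical placeholders, since real values are fitted from user tests, and standards such as ISO 9241-9 compute throughput with an "effective" target width rather than the nominal one used here.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Fitts' index of difficulty in bits (Shannon formulation): log2(D/W + 1)."""
    return math.log2(distance / width + 1)


def movement_time(distance: float, width: float, a: float = 0.1, b: float = 0.15) -> float:
    """Predicted movement time in seconds, MT = a + b * ID.
    a and b are HYPOTHETICAL constants; real values come from fitting user test data."""
    return a + b * index_of_difficulty(distance, width)


def throughput(distance: float, width: float, measured_time: float) -> float:
    """Simplified throughput in bits per second: ID / measured movement time."""
    return index_of_difficulty(distance, width) / measured_time


if __name__ == "__main__":
    # Same distance (400 px), different button widths.
    for width in (80, 40, 20):
        print(f"W={width:>2} px: ID={index_of_difficulty(400, width):.2f} bits, "
              f"MT={movement_time(400, width):.3f} s")
```

Halving the button width raises the index of difficulty and therefore the predicted time, which is why frequently used GUI targets are made large and placed close to where the pointer usually is.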

Descriptive models

We make use of three different descriptive models:

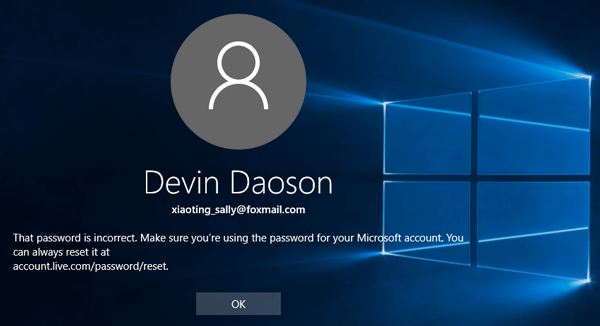

- KAM: the key-action model

The KAM model studies how users expect the computer to behave or respond to their commands, and how the computer actually behaves in reality. A simple example is a new user logging in: they might expect the computer to log them in as soon as they type their username and password, but if they make a spelling mistake in either one, they will not be able to access their profile. This is a KAM scenario: the user expects a certain reaction once they have executed the command, but the computer behaves differently because of a mistake in the command.

To avoid situations where the user expects the computer to do one thing while it is actually doing another, the system tries to tell the user what is happening through feedback, such as a loading icon or a pop-up window explaining what the computer is doing and why the command the user gave has been delayed.

An example of this feedback is when the user tries to close a Word document and the mouse cursor turns into a loading icon, showing that background processes such as saving the document are still running and that closing the document has therefore been delayed.

Through the KAM model, we therefore analyse what the user expects the computer to do in a certain situation and what the computer is actually doing in reality.

- Buxton's three-state model

When designing a system interface, one of the major aspects to consider is how much effort or pressure the user will have to put in to execute or respond to a command. We therefore use this model in the design phase to research and analyse the pressure and dexterity that users need when they make movements with the mice and touchpads found on laptops and computers.

This model also helps us predict which input device will be easier to use with the interface, for example a mouse or a touchpad. However, this depends entirely on the user and how regularly they use that device: a regular touchpad user might argue that a touchpad is much quicker and easier than a mouse, while a regular mouse user might argue the opposite.
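Buxton's model is usually presented as three input states: state 0 (out of range, the device is not sensed), state 1 (tracking, moving the pointer) and state 2 (dragging, moving with a button held or a stylus pressed). Devices differ in which states they can sense, which is one way to compare a mouse with a touchpad. The Python sketch below encodes that state machine as it is commonly described; the event names and the button-less touchpad are illustrative assumptions.

```python
from enum import Enum


class State(Enum):
    OUT_OF_RANGE = 0  # device not sensed, e.g. finger lifted off a touchpad
    TRACKING = 1      # moving the pointer
    DRAGGING = 2      # moving while a button is held or a stylus tip is pressed


# States each device can sense, as commonly given for Buxton's model.
DEVICE_STATES = {
    "mouse": {State.TRACKING, State.DRAGGING},
    "touchpad (no button)": {State.OUT_OF_RANGE, State.TRACKING},
    "stylus on tablet": {State.OUT_OF_RANGE, State.TRACKING, State.DRAGGING},
}

# Allowed transitions: 0 <-> 1 and 1 <-> 2; there is no direct jump between 0 and 2.
TRANSITIONS = {
    (State.OUT_OF_RANGE, "enter"): State.TRACKING,
    (State.TRACKING, "leave"): State.OUT_OF_RANGE,
    (State.TRACKING, "press"): State.DRAGGING,
    (State.DRAGGING, "release"): State.TRACKING,
}


def step(device: str, state: State, event: str) -> State:
    """Apply an input event; events the device cannot express leave the state unchanged."""
    new_state = TRANSITIONS.get((state, event))
    if new_state is None or new_state not in DEVICE_STATES[device]:
        return state
    return new_state


if __name__ == "__main__":
    for device in DEVICE_STATES:
        state = State.TRACKING
        for event in ("press", "release", "leave"):
            state = step(device, state, event)
        print(device, "->", state.name)
```

A mouse never reaches state 0, because lifting it off the desk is not sensed, while a button-less touchpad cannot reach state 2, so dragging needs an extra gesture or button.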

- Guiard’s model of bimanual skill

This model focuses on bimanual skills. Different users prefer different hands when interacting with an interface, so when creating an interface you should use this model to make sure it is accessible and logically designed for both right-handed and left-handed users; a left-handed user should not be at a disadvantage or feel discomfort. Guiard's model also recognises that people do not simply have two interchangeable hands: they use each hand differently depending on the activity. For example, when writing, the non-dominant hand positions the paper while the dominant hand makes the fine movements of the pen. When designing an interface, keep in mind that people use different hands for different tasks, and make sure the user can interact with your equipment and interface equally well with either hand.

- Information processing

In the section below we discuss how information is processed and how fast it is processed.

Human as a component

One of the most important variables in any HCI design is the human end user. This factor is delicate and very difficult to manage, because different end users have different needs, demands and expectations of the system, and different users may encounter different issues depending on how they interact with it. To prepare for these eventualities, we go through a risk assessment process in which we analyse the different scenarios a user might create, for example what would happen if the end user clicks option Z before option X.

Human information processing (HIP)

HIP is the way we absorb and retain information, which is then analysed and used for the tasks where we think it will be useful. Computer hardware works in a similar way to our brain: it takes in information, the information is processed by the mind (in the computer's case, the software), and the output depends on previously gained knowledge, on our ability to interpret information and on our decision about what to do with it (the decision maker). HIP can be improved in many ways, such as by varying the information we provide, by improving the skills and abilities of decision makers, and by building formal models of human decision making.

Overview of goals, operators, methods and selection rules (GOMS)

We can use the GOMS method to predict how long it will take for an action or command to be carried out. With the GOMS model, the designer identifies a goal and describes the operators, the methods (sequences of operators) and the selection rules (how the user chooses between methods) needed to achieve it. This lets the designer predict the total time a task will take and, at the same time, spot any loopholes or risks. However, these predictions only hold if the user carries out the instructions as anticipated. The results can then be used to analyse and recommend which user interface method is the most reliable and efficient for the end user's requirements.
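The four GOMS parts map naturally onto a small data structure. The Python sketch below is a hypothetical example for the goal "save the document": two methods built from operators, a selection rule that picks one, and a time prediction that sums the operator times. The operator times are commonly cited KLM values and the methods and selection rule are invented for illustration, so the numbers show how the prediction is made rather than real measurements.

```python
# Hypothetical operator times in seconds, borrowed from commonly cited KLM values.
OPERATOR_TIMES = {"K": 0.20, "P": 1.10, "B": 0.10, "H": 0.40, "M": 1.35}

# Goal: save the current document.
# Methods: hypothetical operator sequences that achieve the goal.
METHODS = {
    "menu": ["M", "P", "B", "B", "M", "P", "B", "B"],  # click File, then click Save
    "shortcut": ["M", "K", "K"],                       # press Ctrl+S
}


def select_method(hand_on_mouse: bool) -> str:
    """Selection rule: a user already holding the mouse uses the menu, otherwise the shortcut."""
    return "menu" if hand_on_mouse else "shortcut"


def predict(hand_on_mouse: bool) -> tuple[str, float]:
    """Apply the selection rule, then sum the operator times of the chosen method."""
    method = select_method(hand_on_mouse)
    return method, sum(OPERATOR_TIMES[op] for op in METHODS[method])


if __name__ == "__main__":
    for hand_on_mouse in (True, False):
        method, seconds = predict(hand_on_mouse)
        print(f"hand on mouse={hand_on_mouse}: {method} method, about {seconds:.2f} s")
```

As the text notes, the prediction is only valid if the user actually follows the chosen method; a user who hesitates or makes a mistake falls outside the model.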

- Specialist

Computer systems are now used by millions of people every day, and every user is different and has different abilities. You therefore want to design an interface that is accessible to everyone, which means keeping in mind that some users have disabilities that could put them at a disadvantage when using your interface, and thinking about how to enable them to interact with your system. For example, you might integrate speech software for input and output so that visually impaired users can interact with your interface. You should also consider the “what if” scenario: if the user also has a speech impediment, such as a lisp, how difficult would it be for them to use speech-activated software?

You will also have to consider users who are both visually and hearing impaired; in this scenario, the best choice is haptic feedback technology. Haptic technology is also one of the best options for users who are seriously physically challenged and cannot use their limbs. Haptic design is widely used and allows users to be more independent thanks to pointers that let them choose what they want the system to do. Head-up and remote-controlled displays are also used nowadays to give input to, or receive output from, system interfaces.

When designing your interface, you will therefore have to make sure that these specialist tools are well integrated into the GUI so that it is accessible to as many people as possible. The more users with different capabilities the interface is designed for, the more widely it will be used, and as a GUI developer you want to reach as many users as possible. In this new generation, the most successful GUI is the one that meets the needs of the most users, and this can only be done by integrating specialist software and equipment so that as many people as possible can interact with it.

Task 3

P3 - design input/output HCIs to meet a given specification

We have been asked to design an HCI game prototype, and below we show the design concept we will use for our HCI project. For this project we want to simulate an action that occurs very often in shooting games: throwing a grenade.

We want our HCI-based game to be able to throw a grenade (or any other object) into the environment it is in. For an HCI project to work, it needs human motion as input. In our scenario, the input will be us using our hands to grab the grenade from the environment and throw it, which we can do thanks to the Leap Motion controller. Once we make the throwing movement, the output will be the screen showing the grenade or object being thrown into the environment. This is the basic concept and design we will adopt for our HCI project: using a Leap Motion, the user's grab-and-throw movement is the input, and the output is the screen showing the grenade or object being thrown into the environment around them.
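The grab-and-throw input can be described as a simple rule: the object is held while the hand is closed, it is released on the frame where the hand opens, and it then flies with the hand's velocity under gravity. The Python sketch below illustrates that logic on made-up tracking data. It does not use the Leap Motion or Unity APIs; the `HandSample` structure, the 0.8 grab threshold and the sample values are hypothetical, and in the actual project the Interaction Engine and Unity's physics handle this.

```python
from dataclasses import dataclass
from typing import Optional

GRAB_THRESHOLD = 0.8  # HYPOTHETICAL: grab strength (0 = open hand, 1 = fist) counted as holding
GRAVITY = -9.81       # m/s^2, acting along the y axis


@dataclass
class HandSample:
    t: float                         # timestamp in seconds
    pos: tuple[float, float, float]  # palm position in metres (x, y, z)
    grab: float                      # grab strength from 0 to 1


def release_velocity(samples: list[HandSample]) -> Optional[tuple[float, ...]]:
    """Find the frame where the fist opens and estimate the hand's velocity
    from that frame and the last frame in which the object was still held."""
    for prev, cur in zip(samples, samples[1:]):
        if prev.grab >= GRAB_THRESHOLD and cur.grab < GRAB_THRESHOLD:
            dt = cur.t - prev.t
            return tuple((c - p) / dt for p, c in zip(prev.pos, cur.pos))
    return None  # the object was never released


def landing_point(start, velocity, dt: float = 0.001) -> tuple[float, float]:
    """Step the released object forward under gravity until it reaches the floor (y = 0)."""
    x, y, z = start
    vx, vy, vz = velocity
    while y > 0:
        vy += GRAVITY * dt
        x, y, z = x + vx * dt, y + vy * dt, z + vz * dt
    return x, z


if __name__ == "__main__":
    # Hypothetical tracking data: the hand moves forward and up, then opens.
    frames = [
        HandSample(0.00, (0.0, 1.00, 0.0), 1.0),
        HandSample(0.05, (0.1, 1.05, 0.0), 1.0),
        HandSample(0.10, (0.2, 1.10, 0.0), 0.2),
    ]
    v = release_velocity(frames)
    print("release velocity (m/s):", v)
    print("lands at (x, z):", landing_point(frames[-1].pos, v))
```

Estimating the throw from the last two frames keeps the sketch short; a real system would usually average several frames, because single-frame tracking noise makes the release velocity jumpy.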

P5 - test the HCI created

Below is evidence, in the form of screenshots, of the successful tests.

P6 - document the HCI created

Below we show screenshots of the development of the HCI game.

The first thing we did after launching Unity was import the Leap Motion Core Assets package from the link the teacher gave us. Once it was imported, we opened the package, went to the Example Assets folder and opened the Capsule Hand (desktop) file, which shows a Leap Motion device and virtual hands floating in the virtual environment. We then tested the program by putting our hands in front of the Leap Motion and checking that the virtual hands responded to our hand movements; the picture above shows the result.

Now that we have added the essential files and packages and made sure that each of our fingers is tracked correctly, we add a plane to our scene. To add a plane, right-click in the Hierarchy panel and select Plane under 3D Object. Once selected, the plane appears on your screen, and you will need to adjust it so that it sits beneath your virtual hands. The picture above shows the final result: the plane is successfully positioned beneath the two virtual hands.

Now that we have added a plane, we add a sphere to the scene in the same way: right-click in the Hierarchy panel, then under 3D Object select Sphere. Once the sphere has been added, set its scale to 0.2 for X, Y and Z in the Inspector panel so that it is easy to handle. The picture above shows the process of adding a sphere, and the picture below shows the sphere once it has been resized correctly.

Now that the sphere is in the scene, you need to give it physics components, such as the Sphere Collider, so that the virtual hands can interact with it. Next, import the Leap Motion Interaction Engine package. Once it is imported, go to Modules/InteractionEngine/Prefabs and drag and drop the Interaction Manager into your Hierarchy panel. To interact using your Leap Motion hands, delete the left and right Rigid Hands found under ‘Hand Models’ in the Hierarchy panel and replace them with the Interaction Hands found in your Project panel. When you have finished these steps, drag and drop them inside the left and right Capsule Hands.

For both hands to work, lastly drag and drop the Interaction Manager into the Manager field on the right-hand side of the interface. Then go to Modules/scripts/InteractionBehaviour and drag and drop it into the Inspector panel on the right-hand side. This step may open the Auto Fix window if your Interaction Manager is not found immediately; if it does, click Auto Fix so that the software fixes the problem automatically. You may then be asked to auto-fix other necessary updates. Once that is done, try to pick up the sphere; the picture below shows me picking up the sphere.

Below is a picture of me successfully throwing the sphere into the environment.

The effectiveness of the HCI

There are many ways to measure whether the HCI you have created is effective; for example, you can check that the delay between input and output is minimal.

One way we made our HCI effective was by making the objects in the environment proportional to the virtual hands, so that they are much easier to interact with and manage. To make the object (in this case the sphere) proportional to the virtual hands, we reduced its scale to 0.2 in every direction (X, Y and Z), since the object should have the same size in each direction so that its shape stays solid, compact and stable. This made sure the virtual hands could easily pick the object up from the environment and throw it.

Another way we made our HCI effective was by making sure that the plane was not too far from the floating virtual hands, as this would make picking up objects harder (our HCI involves picking up objects and throwing them into the environment). The object rests on top of the plane while the virtual hands float above it, so for the HCI to be effective the height between the plane and the floating hands had to be minimal, letting us easily pick up the object and throw it. We achieved this with the plane positioning tools, which let us place the plane at the right height and ensured there were no height-related problems when trying to grab the object.

Another simple way to measure the effectiveness of our HCI is to check that the distance between the object and the virtual hands is minimal, so that reaching for the object is not difficult. We kept this distance minimal using the 3D object positioning tools. If the object is too far from the floating hands, it becomes very hard to get hold of, as the user has to stretch their arms to reach it, which makes the HCI less effective. We avoided this problem by using the positioning tool to place the object in an optimal position.
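The size and distance checks described above can be expressed as two small calculations. The Python sketch below assumes that Unity's built-in sphere is 1 unit across at scale 1 (so a scale of 0.2 gives a 0.2-unit sphere) and that 1 Unity unit is treated as 1 metre. The hand and sphere positions and the 0.3 m comfortable-reach threshold are hypothetical values for illustration, not measurements from our project.

```python
import math

UNITY_SPHERE_DIAMETER = 1.0  # Unity's built-in sphere is 1 unit across at scale (1, 1, 1)


def sphere_diameter(scale: float) -> float:
    """Diameter of the built-in sphere after applying a uniform scale."""
    return UNITY_SPHERE_DIAMETER * scale


def within_reach(hand_pos, object_pos, reach: float) -> bool:
    """True if the object is no further from the virtual hand than `reach`."""
    return math.dist(hand_pos, object_pos) <= reach


if __name__ == "__main__":
    # Hypothetical positions in metres (1 Unity unit is treated as 1 metre).
    hand = (0.0, 0.30, 0.0)
    sphere = (0.0, 0.10, 0.10)
    print(f"sphere diameter: {sphere_diameter(0.2):.2f} m")
    print("within reach:", within_reach(hand, sphere, reach=0.30))
```

Running a check like this for every object placed in the scene would turn "the distance is minimal" into a measurable pass/fail test.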

D1 - Evaluate the HCI developed

I believe HCI is the future of computing, and the HCI project we created shows how much potential there is still to be discovered. I think we are currently in the core years in which HCI is actually being developed: it is no longer at its early stages, nor is it at its finishing stages. We are in the middle, trying to develop smooth HCIs that will help us in the future.

The AI: More than Human convention that we attended reinforced my view that AI is going to be an integral part of our lives in the future. It also opened my eyes to the progress we have made: the convention showed where we started, where we are now and what the future may hold for AI, and in my opinion the best is yet to come.

This event was very informative and an eye-opener. It made me realise that every big thing or idea starts from a small concept: in fact, the first AI concept was created only to solve calculations and play chess against a human.

The picture above proves this point. Alan Turing's idea of AI was to create an electronic brain that could solve calculations and play chess with a human. This is a very simple concept that almost every device can achieve today, which shows how far we have come since we started. We began with a simple idea and a dream, and now we can talk to AI through human-computer interaction, for example with Google Assistant. These notes from Alan Turing made me realise that, although nowadays we always think big, the biggest technological revolutions often come from the simplest ideas.

In the picture to your left we see Alan Turing's idea of an electronic brain come true: IBM's chess-playing computer Deep Blue played against chess champions such as Garry Kasparov. The machine eventually won their 1997 match, which was a huge event and a turning point in the history of HCI and AI, because it was the first time a reigning world chess champion lost a match to a computer under standard tournament conditions, in a game invented by humans. The win showed the world that machines could carry out complex calculations and anticipate an opponent's moves, as Deep Blue could evaluate around 200 million chess positions per second.

This victory made headlines around the world because it showed how much potential computers have if developed in the right way: the machine could perform complex calculations far better than a human, and in much less time. It can also be seen as a turning point after which humans began slowly being replaced by machines in some work sectors. Deep Blue itself relied mainly on brute-force search and a hand-tuned evaluation function rather than machine learning, but its victory encouraged further work on machine learning and deep learning, which allow systems to learn from their mistakes and improve with every iteration at a speed humans cannot match.

From a simple chess game between a machine and a human, we can draw the conclusion that if we focus our effort on deep learning and machine learning, we can achieve far more from AI than playing chess. That idea has brought us to today, where human-computer interaction happens on a daily basis and machines learn from human behaviour and from their own behaviour so that they are less likely to repeat the same mistake. Deep learning also lets these systems come up with solutions without explicit step-by-step instructions from the user. Thanks to deep learning and machine learning, we now have HCIs that can understand humans and hold human-like conversations, such as Google Assistant and Cortana, which keep learning from their users' behaviour to improve themselves.

One example of humans interacting successfully with AI is the robot dog, which can carry on a full interaction with humans depending on their input or movement. Again, this is possible thanks to deep learning and machine learning, which allow the dog to understand and analyse the human's input, such as a specific motion, and then respond correctly.

I believe the ultimate goal of AI since the very beginning has been to create a humanoid robot that can perform the tasks humans perform, decide what is right and what is wrong, and hold conversations so real that the user feels they are talking to another human. At the convention we were able to see the early stages of humanoid robot creation; below is a picture of a hand that was prototyped at an early stage of humanoid robot development.

However, I think we are closer than ever to finalising a robot that looks and feels like a human, and thanks to huge advances in deep learning, such robots are now also able to hold realistic conversations with humans. Below is a picture of one of the concept AI humanoid robots.

As I mentioned before, HCI has come a long way since it started, and we are currently at the crucial development stage of various HCIs, which I believe will be complete and an integral part of our lives in 10 to 20 years' time. I believe HCI will now be developed at a faster rate than ever before, because the languages and equipment used to develop HCI are no longer as complex or expensive as they once were: basic computing knowledge and basic computing equipment are enough to start programming HCI software.

One of the strongest pieces of evidence that HCI programming is becoming easier and more affordable is that I was able to create an actual HCI program with a simple computer and affordable equipment, which would have been impossible 10 to 20 years ago. Making HCI development available to more people gives it more exposure than ever before and brings more ideas into the HCI industry, which means more progress is made in less time.

For our project we used a Leap Motion controller to create a game. The game was based on a very simple concept: being able to throw a grenade or other object into the virtual environment using hand motions, as in games such as Call of Duty, and I can confidently say that we successfully created the game we had planned. The development process was not complicated, as we did not have to use any complicated programming language. The difficult part was making sure that the objects and equipment in the virtual environment were positioned correctly: if the virtual equipment, such as the sphere, the plane or the floating hands, was even slightly out of position, it became very hard to interact with the HCI. Another problem we encountered was physics: at some points during development we were not able to pick up or throw the object, and this was entirely because some of the required physics options had not been activated. This taught us to make sure the physics section is set up and coded correctly, because if it is not, the user cannot interact with the game properly. Overall, I think the game we created meets the targets we set before starting development.
Our target was to create a game in which the user can pick up an object and throw it into the virtual world, with the Leap Motion detecting their movements. I can say that our program does this successfully, as the game is very responsive to user motions: if the user makes a grab movement, the virtual hand grabs the object, and if the user makes a throwing movement, the virtual hand throws the object into the virtual world in the direction the user chose. The distance the ball travels varies with the force the user puts into the throw.
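
Why a harder throw travels further can be illustrated with the textbook formula for the range of a projectile, R = v² sin(2θ) / g, where a stronger throw gives the ball a higher release speed v. The Python sketch below is only an approximation under stated assumptions: no air resistance, the ball lands at the same height it was released from, and g = 9.81 m/s². The physics engine in our game may model the throw differently, and the function name `throw_range` is hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2 (assumed standard value)

def throw_range(speed, angle_deg, g=G):
    """Horizontal distance of a drag-free projectile that lands at its launch height.

    speed: release speed in m/s; angle_deg: launch angle above the horizontal.
    """
    angle = math.radians(angle_deg)
    return speed ** 2 * math.sin(2 * angle) / g

# Range grows with the square of the release speed: doubling the speed
# of a throw at the same angle roughly quadruples the distance travelled.
for speed in (2.0, 4.0, 8.0):
    print(f"{speed} m/s -> {throw_range(speed, 45):.2f} m")
```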

Therefore, I can say that our game has met the criteria we set at the beginning of the development process.

I believe Leap Motion is currently being used much like the Kinect, as both are similar devices used to create games where human motion is essential. However, I believe Leap Motion is far more versatile than a normal Kinect because it can be programmed for different functions, even though it is currently used mainly for simple programs and games. I believe that in 2 to 3 years we will be able to create more programs with Leap Motion, such as interactive menus that work through human movement alone, without any keyboard or mouse input. Leap Motion is another example of how much potential HCI has. I believe that one day devices like this will become essential parts of our lives and improve our quality of life: as deep learning and machine learning advance, AI will get better, HCI will perform far better than it does now and will be integrated into our lives like never before, and I believe there will come a point when AI and HCI outperform humans in every aspect of life. I think we had our first proof of this in 1997, when IBM's Deep Blue chess computer outperformed a human in a game created by humans. This showed how much potential AI had, and we are now actually tapping into that potential. As we develop AI, it is natural to also improve the HCI that the AI will have, because at the end of the day AI is being created to improve human quality of life, and to do so it must be able to interact with humans effectively.
Even though we are the ones programming the HCI of devices at present, I think that in 5 to 10 years' time, as machine learning and deep learning advance, AI will be able to develop its own HCI automatically by learning from trends, statistics and mistakes. Some of the AI currently on the market cannot do this yet, but AI is becoming more and more independent and is starting to need less human input or help.

However, like any technology, it also has its negatives. I believe that as AI advances and HCI gets better day by day, we will rely more and more on AI and its HCI to do even the simplest jobs, and over the years we will become less and less able to cope with tasks ourselves. In some ways we are already seeing the side effects: for example, when the O2 network went down, London's bus information system went down with it for several hours. This kind of problem will occur more and more often as we integrate more HCI into our lives, because if the HCI goes down, we will not be able to perform tasks. Another disadvantage of the advancement of AI and HCI is the loss of jobs. As you may have noticed, many jobs are already being done by robots that can do the same work as a human, faster and over longer periods of time. This has led the car industry to replace workers with robots for greater productivity, and shopping centres to replace cashiers with self-service checkouts that understand human interaction and let customers check out much faster. I believe this trend will continue, putting more employees out of work as robots become able to do their jobs better than they can, and it is likely to increase unemployment around the world as humans are increasingly replaced by machines.

Overall, I think the process of integrating HCI into our lives has already started, as we interact with HCI on a daily basis, but I believe we are still in the development stages, and in 10 to 20 years' time HCI will be well integrated into our daily lives. The simplification of the languages used to build HCI programs and the low prices of HCI equipment and software are helping more people get involved in HCI development, which means more minds and more ideas are flowing into the HCI industry. This will help us create more effective HCI that improves human quality of life. I also believe that as deep learning and machine learning develop, HCI will be needed and used by humans more and more. This is a good thing, as HCI will help us in every part of our lives; however, I also feel that the constant development of HCI will make humans more dependent on HCI and computers than ever. As I stated before, we are in the development years of HCI: we are neither at the beginning nor close to perfecting it, and the more we develop HCI now, the sooner we will achieve perfect HCIs that improve our quality of life.

Bibliography

- Karen Anderson, Information Technology BTEC Level 3 Unit 23: Human Computer Interaction, 2011, pp. 122-153, Pearson, United Kingdom

- TechTarget, https://www.techtarget.com, accessed on 11/3/19

- Webopedia, https://www.webopedia.com, accessed on 12/3/19

- Techwalla, https://www.techwalla.com, accessed on 11/3/19

- Interaction Design Foundation, Human-Computer Interaction (HCI), https://www.interaction-design.org/literature/topics/human-computer-interaction, accessed on 10/3/19

- Human information processing (HIP), video: https://youtu.be/k5Dlsrs-85Y

- Stephen Hawking's voice and the machine that powers it, video: https://youtu.be/OTmPw4iy0hk

- User experience tutorial: Fitts's Law, lynda.com, video: https://youtu.be/95RoKSFyQ_k

- VOICE Summit, VOICE blog, How Voice Tech Is Slowly Including People With Speech Impediments, J. Madeiros, 1 Nov 2018, https://www.voicesummit.ai/blog/how-voice-tech-is-slowly-including-people-with-speech-impediments, accessed on 28/3/19

- Forbes, The Amazing Ways Hitachi Uses Artificial Intelligence and Machine Learning, Bernard Marr, 14 Jun 2019, https://www.forbes.com/sites/bernardmarr/2019/06/14/the-amazing-ways-hitachi-uses-artificial-intelligence-and-machine-learning/#275d4d563705, accessed on 1/6/19